
Finally. I’ve got around to some game-play programming…

My natural instinct when approaching this is to get busy building a finite state machine, the core of which can be shared across the AI in my game, and then start building some instances to see what I like and what I don’t.

UE4, unsurprisingly, is reasonably opinionated about how you should approach this and has its own system: Behaviour Trees. I’ve seen the BT system mentioned by quite a few devs over the last couple of months, and read bits and pieces about how to use it prior to rolling up my sleeves. I was quite excited to jump in, but over the course of the last ten days I think I’ve gone through at least half of the seven stages of grief just trying to find a way of working with it that I can live with…

One reason for this — and the thing that consistently annoys me about learning modern tools — is the absolute piss-poor state of documentation. I will never understand why people think scrubbing through hours of video is better than concise, written explanations of something, but there you go. Good technical documentation is a dying breed.

The BT system does do slightly better than expected in this regard, as there’s a relatively skinny HOWTO that walks you through the basics, but A) It’s blueprint orientated and B) AI also has to drive animation, and audio, and oh-my-god-stuff-needs-to-be-replicated-and-why-the-fuck-isn’t-this-working-what-the-fuck-simple-thing-have-I-missed-now sob. Etc.

Ok, I’m slightly exaggerating, but after a day of use my initial impression of the whole thing was that it was a teensy bit over-engineered. Not designed for me. And I didn’t like it.

I’ve slightly changed my mind since…

--

My simple starter AI character has a few states:

Some of this information needs to be passed to the animation blueprint (being at attention, for example, or aiming at something) so the correct set of animations gets played. Some of this information needs to be replicated, so clients see the correct thing.

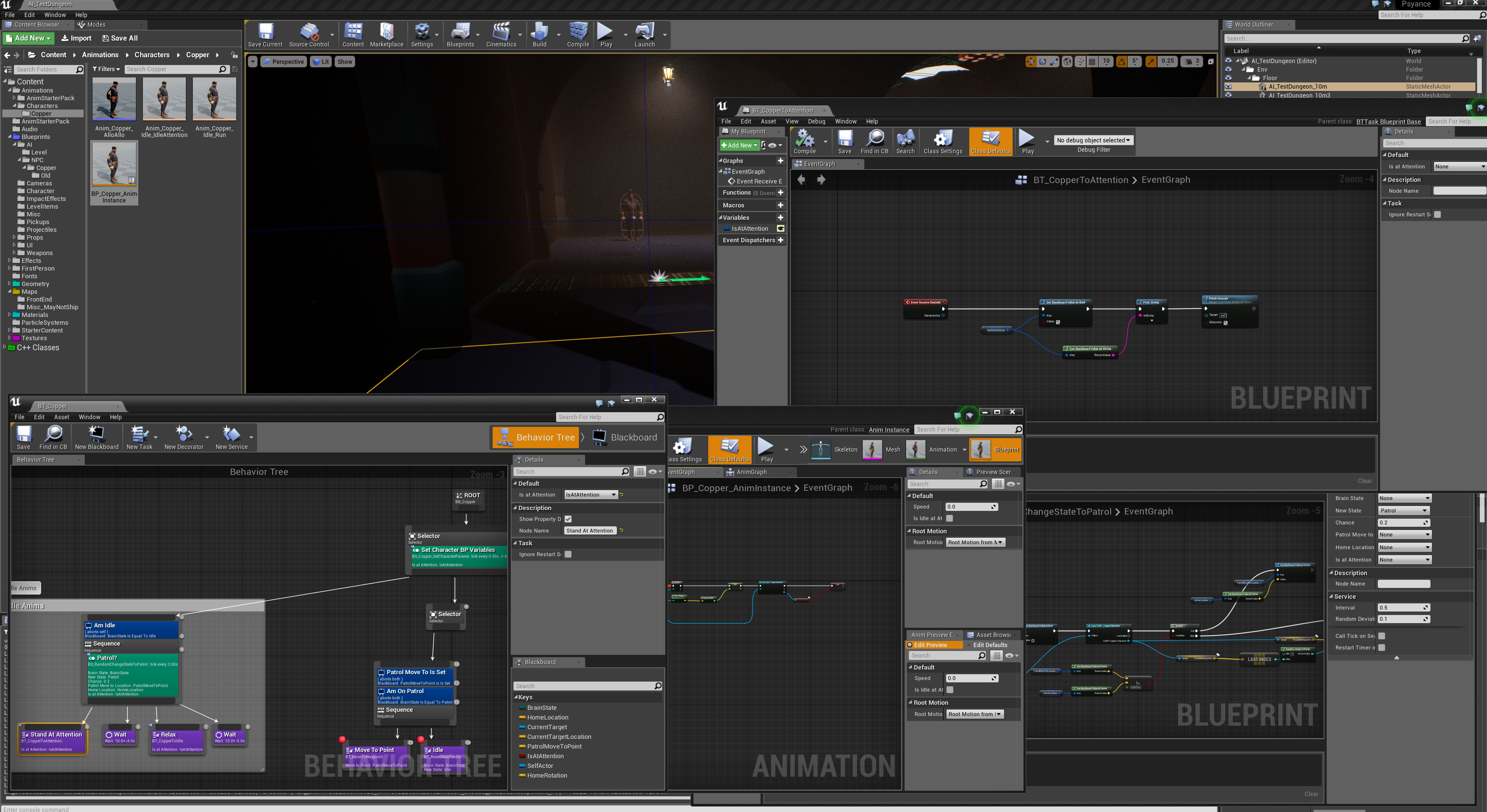

A Behaviour Tree’s Blackboard is basically designed to support this, being a slightly decoupled place to store data that a BT uses to make decisions, and that the rest of your code can then modify & grab, as required. But that means touch-points in multiple places; the character blueprint, custom events to populate the animation blueprint, the AI Controller… in addition to the things that make the BT tick: the functions that make decisions and the services that perform checks.

I really don’t like this. Debugging this stuff is a fucking nightmare. You end up with windows and breakpoints everywhere, and the relevant data is spread too far. I like my parameters in one place and I like to be able to quickly read state at runtime, preferably in one place, so my first foray into this wonderful world (using blueprints only) gave me the heebie jeebies, and worse, didn’t end up working correctly. I have no idea why.

By this point I’ve gone through the first three stages of grief, although mostly “Anger and Frustration”. So I decided in the “Depression” stage to have a go at a pure C++ AI, and check out what else the engine had to offer. This led me to the AI Perception system, which on paper looks great: Sight, Sound, Damage and Touch events for your AI, just by adding a simple component. Woo! And at least half of that system works! The rest, largely undocumented, doesn’t appear to, but it’s labelled WIP so this is either my fault, or there’s some arcane magic that I’m missing.

After an hour I really couldn’t be arsed stepping through the code to work out which, so I reverted to the old Pawn Sensing stuff. This clearly isn’t as good, and it doesn’t provide anywhere near as fancy debugging output (which I’m a sucker for) but it works, and I could move on.

After a day I had my FSM, a little AI dude, a derivation of the player weapons that the AI could use to kill me, and everything was working in co-op with a connected second player. Hurrah! Except that’s only the tip of the iceberg. This stuff only looks good, or becomes convincing, when the transitions between the states have some range of probability, a bit of variation, and reactions can be deferred a little. This means adding transitional states, which means FSMs in code quickly become unwieldy. Adding time delays to state changes also makes things harder to read…
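
To make the "unwieldy" bit concrete, here is a minimal, engine-free sketch of what deferring a single transition costs in a hand-rolled FSM. It is not the code from my project: the state names, the `DeferredFSM` class and its methods are all made up for illustration. Note how one delayed reaction already needs a target, a timer and a pending flag on top of the state itself.

```cpp
#include <cassert>
#include <random>

// Hypothetical states, for illustration only.
enum class AIState { Idle, Alerted, Attacking };

// A tiny FSM where a requested state change only lands after a randomised delay.
class DeferredFSM {
public:
    explicit DeferredFSM(unsigned seed) : rng_(seed) {}

    AIState current() const { return current_; }
    bool hasPending() const { return pending_; }

    // Ask for a transition that fires after a delay drawn from
    // [minDelay, maxDelay) seconds. A new request replaces any pending one.
    void request(AIState next, float minDelay, float maxDelay) {
        assert(minDelay >= 0.0f && minDelay <= maxDelay);
        std::uniform_real_distribution<float> delay(minDelay, maxDelay);
        target_ = next;
        remaining_ = delay(rng_);
        pending_ = true;
    }

    // Call once per frame with the frame time in seconds.
    void tick(float dt) {
        if (!pending_) return;
        remaining_ -= dt;
        if (remaining_ <= 0.0f) {
            current_ = target_;
            pending_ = false;
        }
    }

private:
    AIState current_ = AIState::Idle;
    AIState target_ = AIState::Idle;
    float remaining_ = 0.0f;
    bool pending_ = false;
    std::mt19937 rng_;
};
```

Calling `request(AIState::Alerted, 0.5f, 1.5f)` and then ticking each frame flips the state somewhere between half a second and a second and a half later. Now multiply that bookkeeping by every transition that wants a bit of variation.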

I wasn’t excited about carrying this forward and then having to debug it at some point in the future, and I do want something a tiny bit more advanced than Doom’s AI, so on reflection, straight C++ didn’t seem like the best bet either.

The upward turn (grief stage 5, apparently) was when I worked out how to use BTs with C++. Even moving the tasks (operations in a BT that do something to the character or its data) to C++ is a massive win. I can debug my character, my controller and individual AI tasks within Visual Studio, with a decent call-stack and inspector, and use the BT to add in all the little random waits, variations, or sub-routes, without clogging up the code. Things immediately started looking better.

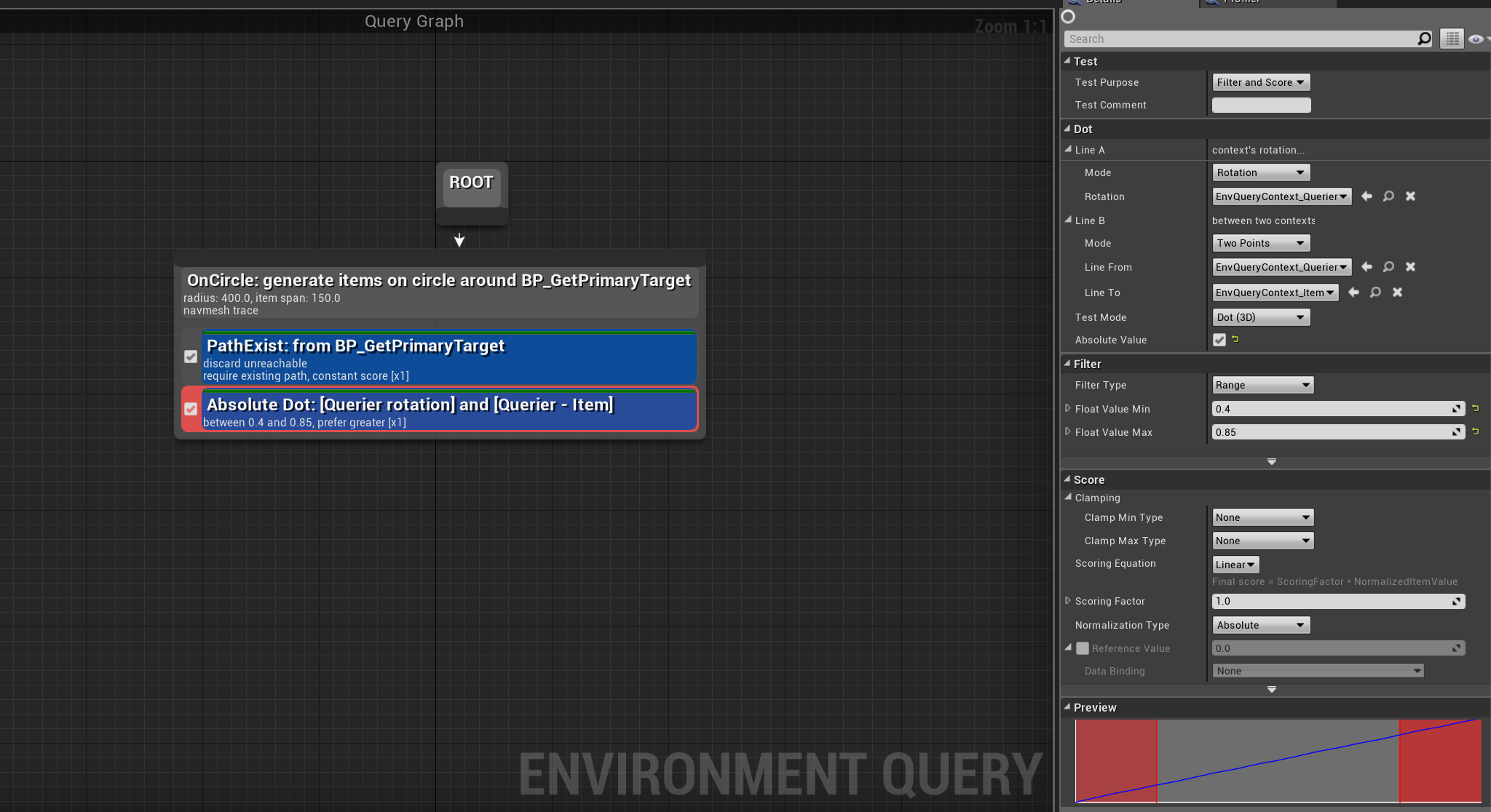

Behaviour Trees also make the Environment Query System a tad easier to use and it seems like something that’s potentially cool, but I’ll be honest, I’m still on the up-hill climb with this. Have a look for yourself.

Spot the system written by a coder, for a coder.

So far I’ve been able to use the EQS to generate random places to look for a player when the AI loses them, and random locations around the player, so the AI isn’t a stationary target when engaging. But I need to spend more time to actually understand how to use this system properly. Having the AI run for cover, or flank the player, would be cool and eminently doable.
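
For anyone wondering what "random locations around the player" boils down to, here is a rough, engine-free sketch of the idea. This is not the EQS API and not what the engine does internally: `Vec2` and `randomPointsAround` are names I've made up, and a real query would also score and filter the candidates (reachability, line of sight and so on) rather than just generating them.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <random>
#include <vector>

struct Vec2 { float x; float y; };

// Returns `count` random points whose distance from `centre` lies between
// minRadius and maxRadius. The radius is sampled uniformly, so points cluster
// slightly towards the inner edge; good enough for a sketch.
std::vector<Vec2> randomPointsAround(Vec2 centre, float minRadius, float maxRadius,
                                     int count, std::mt19937& rng) {
    assert(minRadius >= 0.0f && minRadius <= maxRadius && count >= 0);
    const float twoPi = 6.2831853f;
    std::uniform_real_distribution<float> angle(0.0f, twoPi);
    std::uniform_real_distribution<float> radius(minRadius, maxRadius);

    std::vector<Vec2> points;
    points.reserve(static_cast<std::size_t>(count));
    for (int i = 0; i < count; ++i) {
        const float a = angle(rng);
        const float r = radius(rng);
        points.push_back({centre.x + r * std::cos(a), centre.y + r * std::sin(a)});
    }
    return points;
}
```

Feed it the player's position and pick one of the results as the next move target, and the AI stops being a stationary target. Feed it the last known position instead and you get a crude "go and look over there" search.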

So where am I now?

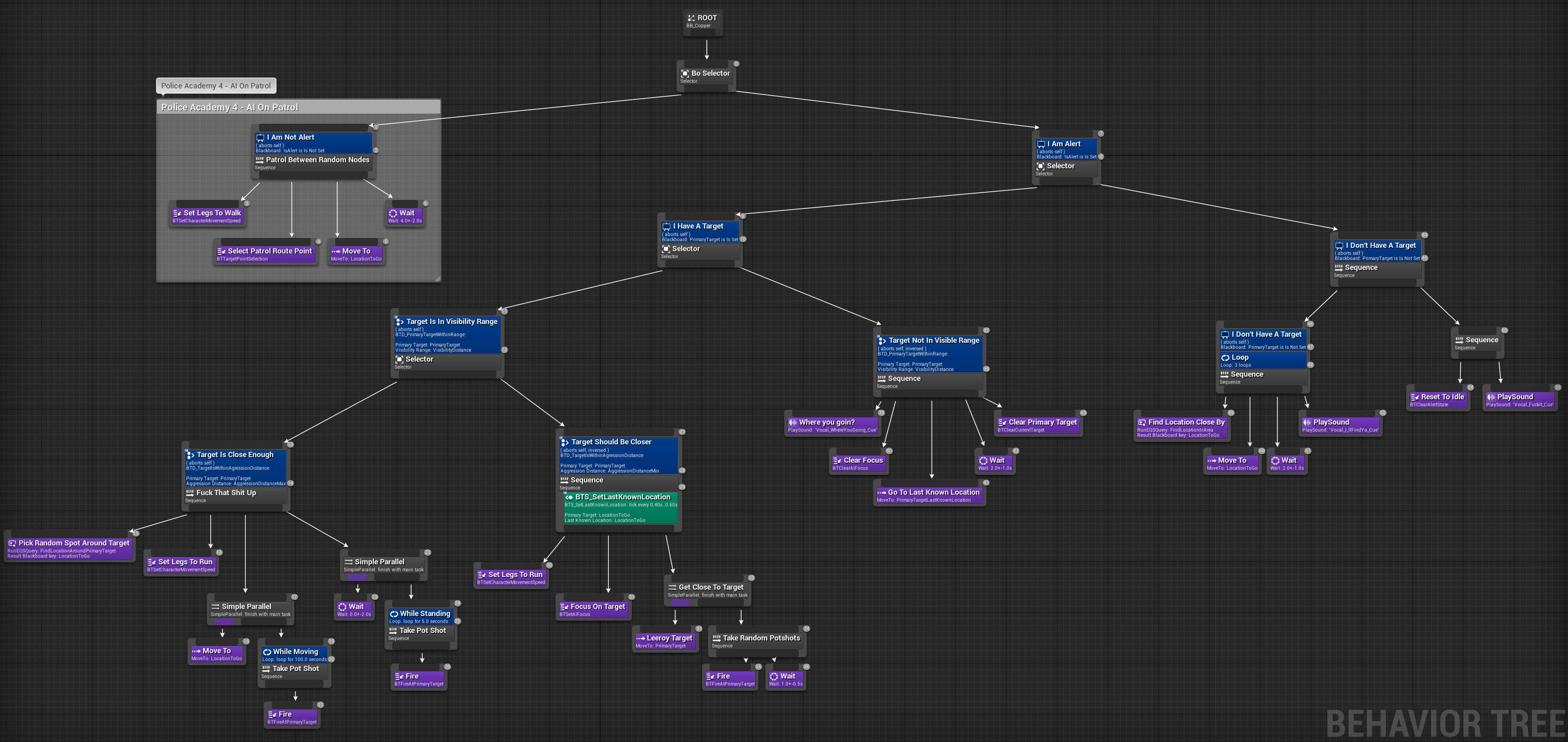

Well, the header image shows the BT I ended up with after all of this experimentation. One thing that’s abundantly clear is that using a BT to sense and make state decisions dynamically, each frame, isn’t the way to go. The stack of conditionals you end up with prior to running sequences and progressing down the tree is messy, and still not fun to debug. I’m going to re-do this next week, but with a stored “current state” that pulls from an enumerated list in the Blackboard. I’ll combine the pawn-sensing, via the AI controller, with the simple tests in the BT to change state under given circumstances, and write a small set of methods in the AI controller to set the animation params, replicate, and/or call multicast stuff for clients.
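
Roughly, the shape I have in mind looks like the sketch below. It is plain C++ with no engine types, so treat `Blackboard`, `Controller`, `GuardState` and `selectBranch` as hypothetical stand-ins rather than anything from UE4: the controller is the only thing that writes the state, and the tree's top level just branches on it.

```cpp
#include <cassert>
#include <string>

// Hypothetical enumerated states; the real list would live in the Blackboard.
enum class GuardState { Patrol, Search, Engage };

// Stand-in for the Blackboard: one stored "current state" the tree reads.
struct Blackboard {
    GuardState currentState = GuardState::Patrol;
};

// Stand-in for the AI controller: sensing events come in here, and this is
// the single place that changes state.
class Controller {
public:
    explicit Controller(Blackboard& bb) : bb_(bb) {}

    void onSeePlayer() { setState(GuardState::Engage); }
    void onLostPlayer() {
        if (bb_.currentState == GuardState::Engage) setState(GuardState::Search);
    }
    int stateChanges() const { return changes_; }

private:
    void setState(GuardState next) {
        if (next == bb_.currentState) return;
        bb_.currentState = next;
        ++changes_;  // real code would set anim params and replicate here
    }

    Blackboard& bb_;
    int changes_ = 0;
};

// Stand-in for the tree's top-level selector: one branch per state, no stack
// of per-frame conditionals.
std::string selectBranch(const Blackboard& bb) {
    switch (bb.currentState) {
        case GuardState::Patrol: return "patrol";
        case GuardState::Search: return "search";
        case GuardState::Engage: return "engage";
    }
    assert(false && "unhandled GuardState");
    return "patrol";
}
```

The point of funnelling everything through one `setState` is that there is exactly one place to put a breakpoint when the AI does something daft.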

I think this will reduce the surface area for debugging, make the BT itself a bit cleaner, and leave me with a small collection of C++ BT Tasks that I can re-use.

But those could be the famous last words of stage 7: acceptance and hope.
